Import the Comma-Delimited File accessories.csv

Importing comma-delimited (.csv) files into a database or application is a common task across many domains. A successful import depends on understanding the file structure, the data mapping, validation, and error handling. This guide walks through each of these, along with common techniques and practices.

The structured, plain-text format of comma-delimited files makes it straightforward to exchange data between software applications and systems. The comma separates the fields within each record, so a field that itself contains a comma must be quoted to keep the record intact during import. Files can be imported manually or with automated tools.

1. Import Process Overview

Importing a comma-delimited file (.csv) transfers data from the file into a destination system, usually a database or software application. The process generally consists of the following steps:

- Load the .csv file into the system using appropriate software or tools.

- Map the data from the file to the corresponding fields in the destination system.

- Validate the imported data to ensure accuracy and completeness.

- Handle any errors or issues that may arise during the import process.

Various software and tools are available for importing .csv files, including spreadsheet applications (e.g., Microsoft Excel, Google Sheets), database management systems (e.g., MySQL, PostgreSQL), and specialized data import tools.

2. File Structure and Format

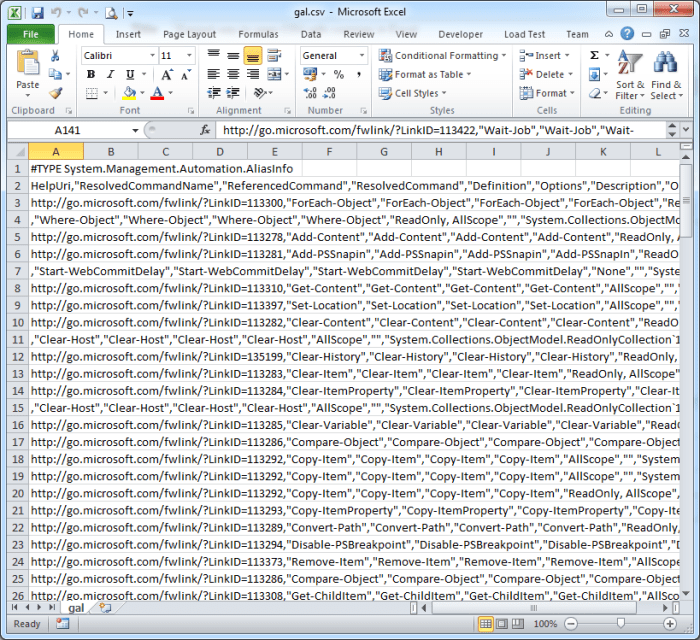

A comma-delimited file is a text file where data is organized into rows and columns, with each column separated by a comma (,) character. The first row of the file typically contains the column headers, which define the names of the data fields.

Subsequent rows contain the actual data values.

Because the comma is the field separator, a value that itself contains a comma, a double quote, or a line break is conventionally enclosed in double quotes so that it is read as a single field.
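The layout described above can be sketched with Python's standard `csv` module. The sample text and its column names (`sku`, `name`, `price`) are assumptions for illustration only; the actual columns of accessories.csv are not given in this guide.

```python
import csv
import io

# Hypothetical sample; the real columns of accessories.csv are not given here.
SAMPLE = 'sku,name,price\nA100,"Cable, USB-C",9.99\nA200,Phone case,14.50\n'


def read_table(text):
    """Split comma-delimited text into a header row and a list of data rows."""
    reader = csv.reader(io.StringIO(text, newline=""))
    headers = next(reader)   # first row: column names
    rows = list(reader)      # remaining rows: data values, all read as strings
    return headers, rows


headers, rows = read_table(SAMPLE)
print(headers)  # ['sku', 'name', 'price']
print(rows[0])  # ['A100', 'Cable, USB-C', '9.99']
```

Note that the quoted value `"Cable, USB-C"` stays a single field even though it contains a comma, and that every value arrives as a string until it is converted.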

3. Data Import Methods

There are several methods for importing a .csv file into a system:

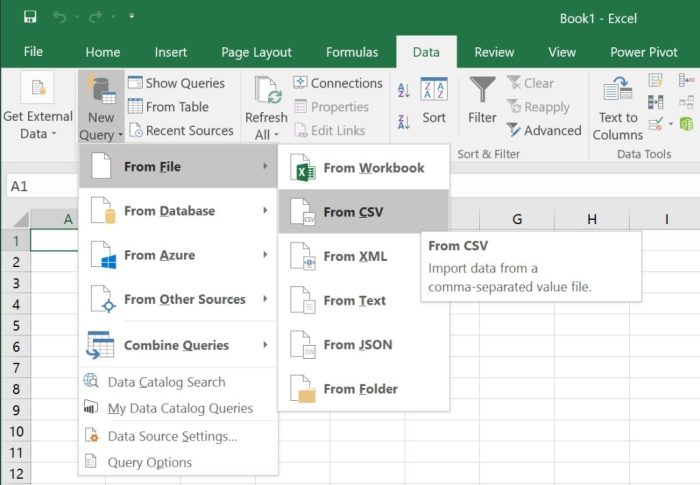

Using Spreadsheet Applications

- Open the .csv file in a spreadsheet application.

- Select the data range to be imported.

- Use the import feature of the application to load the data into the destination system.

Using Database Management Systems

- Connect to the database and make sure the .csv file is accessible to it.

- Specify the mapping between the data fields in the file and the corresponding columns in the target table.

- Execute an import command to load the data from the file into the table.
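As a rough illustration of a database import, the sketch below loads comma-delimited text into an in-memory SQLite database using Python's standard `sqlite3` module. The `accessories` table, its columns, and the sample rows are hypothetical; a real destination would have its own schema.

```python
import csv
import io
import sqlite3

# Hypothetical sample data and table layout, used only for illustration.
SAMPLE = "sku,name,price\nA100,USB-C cable,9.99\nA200,Phone case,14.50\n"


def import_into_sqlite(conn, text):
    """Load comma-delimited text into an 'accessories' table; return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS accessories "
        "(sku TEXT PRIMARY KEY, name TEXT, price REAL)"
    )
    reader = csv.DictReader(io.StringIO(text, newline=""))
    # Explicit mapping from CSV headers to table columns, with type conversion.
    records = (
        {"sku": row["sku"], "name": row["name"], "price": float(row["price"])}
        for row in reader
    )
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(
            "INSERT INTO accessories (sku, name, price) "
            "VALUES (:sku, :name, :price)",
            records,
        )
    return conn.execute("SELECT COUNT(*) FROM accessories").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(import_into_sqlite(conn, SAMPLE))  # 2
```

Server databases usually offer their own bulk-load commands as well, such as `COPY` in PostgreSQL and `LOAD DATA INFILE` in MySQL, which are typically faster than row-by-row inserts.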

Using Data Import Tools

- Select a data import tool that supports .csv files.

- Configure the tool to specify the source file, destination system, and data mapping.

- Run the import process to transfer the data.

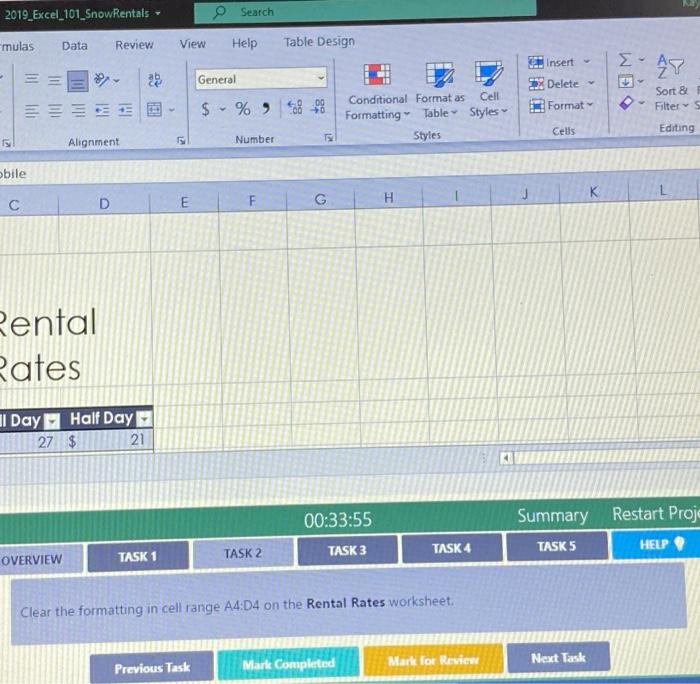

4. Data Mapping and Validation

Data mapping involves matching the data fields in the .csv file to the corresponding fields in the destination system. This ensures that the data is imported into the correct locations.

Data validation is crucial to ensure the accuracy and completeness of the imported data. This can be done by:

- Checking for missing or invalid values.

- Verifying data types and formats.

- Comparing the imported data to the original source.
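One possible way to combine mapping and validation is sketched below. The source headers (`SKU`, `Item Name`, `Unit Price`), the destination field names, and the rule that every field is required are all assumptions made for the example.

```python
import csv
import io

# Hypothetical mapping: CSV header -> (destination field, converter).
FIELD_MAP = {
    "SKU": ("sku", str.strip),
    "Item Name": ("name", str.strip),
    "Unit Price": ("price", float),
}


def map_and_validate(text):
    """Return (valid_records, problems); problems are (line_number, message)."""
    valid, problems = [], []
    reader = csv.DictReader(io.StringIO(text, newline=""))
    for row in reader:
        record = {}
        try:
            for source, (dest, convert) in FIELD_MAP.items():
                value = row[source]            # KeyError if the column is absent
                if value is None or value.strip() == "":
                    raise ValueError(f"missing value for {source!r}")
                record[dest] = convert(value)  # ValueError on a bad data type
        except KeyError as exc:
            problems.append((reader.line_num, f"missing column {exc}"))
        except ValueError as exc:
            problems.append((reader.line_num, str(exc)))
        else:
            valid.append(record)
    return valid, problems


sample = (
    "SKU,Item Name,Unit Price\n"
    "A100,USB-C cable,9.99\n"
    "A200,,14.50\n"
    "A300,Charger,abc\n"
)
valid, problems = map_and_validate(sample)
print(valid)
print(problems)
```

Rejected rows are collected with their line numbers rather than aborting the run, so the valid rows can still be imported and the rest reviewed afterward.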

5. Error Handling and Troubleshooting

Errors during the import process commonly stem from:

- Invalid file format or data structure.

- Incorrect data mapping or validation rules.

- Connection issues between the system and the data source.

To troubleshoot these errors, it is important to:

- Review the error messages and identify the cause.

- Verify the file structure, data mapping, and validation rules.

- Check the system logs for additional information.
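A structural check that runs before the import can make the causes listed above easier to pin down. The sketch below is one hedged approach: it reports rows whose field count differs from the header and catches malformed quoting. The function name `check_structure` and the logger name are illustrative choices, not part of any particular tool.

```python
import csv
import io
import logging

logger = logging.getLogger("csv_import")  # hypothetical logger name


def check_structure(text):
    """Return a list of (line_number, message) structural problems."""
    problems = []
    # strict=True makes the parser raise csv.Error on malformed input.
    reader = csv.reader(io.StringIO(text, newline=""), strict=True)
    try:
        header = next(reader)
    except StopIteration:
        return [(1, "file is empty")]
    try:
        for row in reader:
            if not row:  # skip completely blank lines
                continue
            if len(row) != len(header):
                problems.append(
                    (reader.line_num,
                     f"expected {len(header)} fields, found {len(row)}")
                )
    except csv.Error as exc:
        problems.append((reader.line_num, f"malformed CSV: {exc}"))
    for line_no, message in problems:
        logger.warning("line %d: %s", line_no, message)
    return problems


print(check_structure("a,b,c\n1,2,3\n4,5\n"))
```

Logging each problem with its line number gives the error messages and log entries that the troubleshooting steps rely on.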

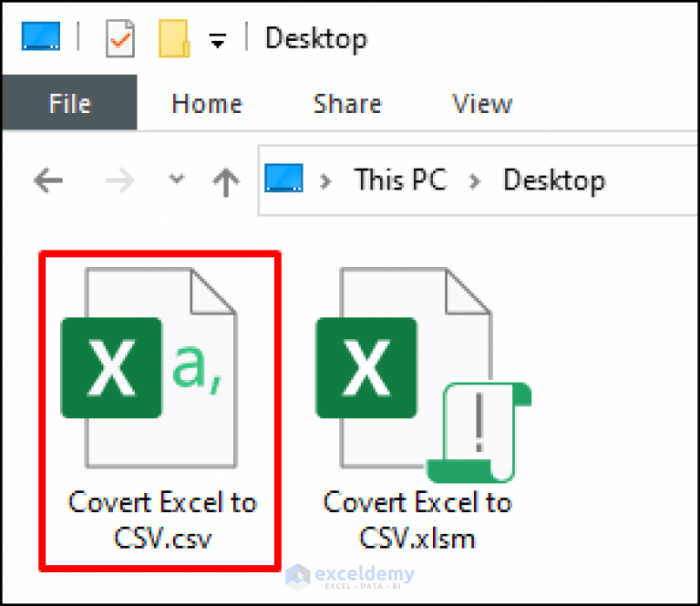

6. File Manipulation and Transformation

Before importing the .csv file, it may be necessary to manipulate or transform the data to meet the requirements of the destination system. This can involve:

- Cleaning and formatting the data.

- Converting data types or formats.

- Sorting or filtering the data.

Tools or scripts can be used to automate these tasks and streamline the import process.
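A small script along these lines might clean, convert, filter, and sort the data before import. The column names and the specific rules (upper-case SKUs, prices without currency symbols, dropping zero-priced rows) are assumptions chosen for the example.

```python
import csv
import io
from decimal import Decimal

# Hypothetical raw export with inconsistent spacing, case, and price formats.
RAW = (
    "sku,name,price\n"
    ' b200 ,Phone   case,"$1,014.50"\n'
    "a100,USB-C cable, 9.99\n"
    "c300,Free sticker,0\n"
)


def transform(text):
    """Return cleaned comma-delimited text ready for import."""
    rows = []
    for row in csv.DictReader(io.StringIO(text, newline="")):
        price = Decimal(row["price"].replace("$", "").replace(",", "").strip())
        rows.append({
            "sku": row["sku"].strip().upper(),       # clean and normalize case
            "name": " ".join(row["name"].split()),   # collapse repeated spaces
            "price": str(price),                     # plain decimal format
        })
    rows = [r for r in rows if Decimal(r["price"]) > 0]  # filter
    rows.sort(key=lambda r: r["sku"])                    # sort
    out = io.StringIO(newline="")
    writer = csv.DictWriter(out, fieldnames=["sku", "name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


print(transform(RAW))
```

`Decimal` is used instead of `float` here so that prices keep their exact written form, which is a design choice rather than a requirement.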

7. Performance Optimization

Optimizing the performance of the import process can reduce import time and improve data throughput. This can be achieved by:

- Using efficient data import methods and tools.

- Batching data imports to reduce the number of connections and queries.

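- Creating indexes on the destination after a bulk load rather than before it. Maintaining indexes during inserts slows the load, while indexes built afterward still improve later data retrieval speed.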
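Batching can be sketched as follows, again using SQLite as a stand-in destination. The table layout, the default batch size of 1000, and the decision to build the index after loading are assumptions for illustration, and real gains depend on the destination system.

```python
import csv
import io
import sqlite3
from itertools import islice


def batched(iterable, size):
    """Yield lists of up to `size` items from `iterable`."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def import_in_batches(conn, text, batch_size=1000):
    """Insert rows in batches, one transaction per batch; return batch count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS accessories (sku TEXT, name TEXT, price REAL)"
    )
    reader = csv.reader(io.StringIO(text, newline=""))
    next(reader)  # skip the header row
    batches = 0
    for chunk in batched(reader, batch_size):
        with conn:  # commit once per batch instead of once per row
            conn.executemany("INSERT INTO accessories VALUES (?, ?, ?)", chunk)
        batches += 1
    # Build the index once, after the data is loaded.
    conn.execute(
        "CREATE INDEX IF NOT EXISTS idx_accessories_sku ON accessories (sku)"
    )
    return batches


demo = "sku,name,price\n" + "".join(f"A{i},Item {i},{i}.5\n" for i in range(5))
conn = sqlite3.connect(":memory:")
print(import_in_batches(conn, demo, batch_size=2))  # 3
```

Five rows with a batch size of two produce three batches (2, 2, and 1 rows), each committed as a single transaction.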

8. Advanced Techniques

For complex data import scenarios, advanced techniques can be employed, such as:

Incremental Data Import

Importing only the changes or updates since the last import, reducing the amount of data transferred and improving performance.
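One simple way to detect changes is to fingerprint each row and compare it with the fingerprint stored from the previous import. The sketch below assumes a unique `sku` key column and keeps the fingerprints in a plain dictionary; a real system would persist them, and this approach does not detect rows deleted from the file.

```python
import csv
import hashlib
import io


def row_fingerprint(row):
    """Return a stable hash of a row's column names and values."""
    joined = "\x1f".join(f"{key}={row[key]}" for key in sorted(row))
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def find_changes(text, seen, key="sku"):
    """Return rows that are new or changed; update `seen` (key -> fingerprint)."""
    changed = []
    for row in csv.DictReader(io.StringIO(text, newline="")):
        fingerprint = row_fingerprint(row)
        if seen.get(row[key]) != fingerprint:
            changed.append(row)
            seen[row[key]] = fingerprint
    return changed


# Hypothetical exports from two consecutive days.
first = "sku,name,price\nA100,Cable,9.99\nA200,Case,14.50\n"
second = "sku,name,price\nA100,Cable,9.99\nA200,Case,12.00\nA300,Charger,19.00\n"

seen = {}
print(len(find_changes(first, seen)))                 # 2
print([r["sku"] for r in find_changes(second, seen)]) # ['A200', 'A300']
```

On the second run only the changed row (A200) and the new row (A300) are returned, so the unchanged row is not transferred again.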

Handling Large Data Sets

Using specialized tools or techniques to import large data sets efficiently, such as parallel processing or streaming.
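Streaming can be illustrated with a generator that yields one row at a time, so memory use stays roughly constant regardless of file size. The `price` column and the summary being computed are assumptions for the example; an in-memory buffer stands in for a large file.

```python
import csv
import io


def stream_rows(lines):
    """Yield one dict per data row without loading the whole input."""
    yield from csv.DictReader(lines)


def summarize(lines):
    """Return (row_count, total_price) computed in a single streaming pass."""
    count, total = 0, 0.0
    for row in stream_rows(lines):
        count += 1
        total += float(row["price"])
    return count, total


# With a real file, pass the open handle instead:
#   with open("accessories.csv", newline="", encoding="utf-8") as handle:
#       summarize(handle)
demo = io.StringIO("sku,name,price\nA100,Cable,9.50\nA200,Case,14.50\n", newline="")
print(summarize(demo))  # (2, 24.0)
```

Because `csv.DictReader` reads lazily from the file handle, only the current row is held in memory at any point.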

Custom Data Parsing and Formatting

Creating custom scripts or tools to parse and format data according to specific requirements, ensuring compatibility with the destination system.
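As an example of custom parsing, the sketch below handles a variant that uses semicolons as delimiters, single quotes for quoting, and a decimal comma in prices. These format details and the `price` column are assumptions chosen to show the idea, not properties of accessories.csv.

```python
import csv
import io


def parse_custom(text, delimiter=";", quotechar="'", decimal_sep=","):
    """Parse delimited text with non-default separators into a list of dicts."""
    reader = csv.DictReader(
        io.StringIO(text, newline=""),
        delimiter=delimiter,
        quotechar=quotechar,
    )
    rows = []
    for row in reader:
        # Normalize the decimal separator before converting to a number.
        row["price"] = float(row["price"].replace(decimal_sep, "."))
        rows.append(row)
    return rows


sample = "sku;name;price\nA100;'Cable; USB-C';9,99\n"
print(parse_custom(sample))
# [{'sku': 'A100', 'name': 'Cable; USB-C', 'price': 9.99}]
```

Passing `delimiter` and `quotechar` to the standard reader is often enough; a fully hand-written parser is only needed when the format departs further from the usual conventions.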

Helpful Answers

What are the benefits of using comma-delimited files?

Comma-delimited files are plain text, which makes them easy to exchange, readable by people as well as programs, and compatible with nearly every spreadsheet application, database, and programming language.

How can I handle large comma-delimited files?

To handle large comma-delimited files, consider streaming the file row by row instead of loading it whole, partitioning it into smaller files, processing partitions in parallel, or importing only the incremental changes.

What are common errors that can occur during the import process?

Common errors include incorrect data formatting, missing or duplicate data, data type mismatches, and file size limitations. Careful data validation and error handling mechanisms are essential to mitigate these issues.